If you wonder how to automate Ubuntu virtual machine creation and then deploy the result to Proxmox in multiple copies, you are looking for Packer and Terraform.

Side note: going for virtual machines in Proxmox is the proper way. I spent several days trying to get LXC containers working, but in the end I would say it is not the best option: too many things go wrong, such as cgroups, AppArmor, nesting, FUSE, ingress networking and so on. There is simply too much to handle with LXC, while VMs have no such problems, so the discussion ends here in favour of Proxmox Qemu. Keep LXC containers for simple things.

Why automate?

Because we can.

Because it is a better way of using our time.

Because it scales better.

Because it provides some form of self-documentation.

Why use Proxmox and Ubuntu VMs?

Ubuntu is a leading Linux distribution without licensing issues, with 34% of the Linux market share. It has a strong user base and it is also my personal preference. It lets us subscribe to Ubuntu Pro, which comes with several compliance utilities.

Proxmox/Qemu became an enterprise-class virtualization package a few years back and is a leading open source solution in its field. It contains clustering features (including failover) as well as support for various storage types. Depending on the source, it has around 1% of the virtualization software market share.

Installation of Packer and Terraform

It is important to have both Packer and Terraform at proper versions coming from the official repositories. It is also important to note that the exact way of building a specific version of the operating system differs from version to version. That is why the title of this article says 22.04-4 and not 22.04-3, because there might be some differences.

Install a valid version of Packer. The version that comes from Ubuntu packages is invalid and cannot manage plugins, so be sure to install Packer from the official repository.

curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -

sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"

sudo apt-get update && sudo apt-get install packer

Install a valid version of Terraform. Having had issues with the bundled version of Packer, I decided to go for the official way of installing from the start:

sudo apt-get update && sudo apt-get install -y gnupg software-properties-common

wget -O- https://apt.releases.hashicorp.com/gpg | \
gpg --dearmor | \
sudo tee /usr/share/keyrings/hashicorp-archive-keyring.gpg > /dev/null

gpg --no-default-keyring \
--keyring /usr/share/keyrings/hashicorp-archive-keyring.gpg \
--fingerprint

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] \
https://apt.releases.hashicorp.com $(lsb_release -cs) main" | \
sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update

sudo apt-get install terraform

Terraform Telmate/Proxmox

An important note regarding Terraform and its plugin for Proxmox: this plugin, as well as the Proxmox golang API, is provided by a single company, Telmate LLC. The plugin has some compatibility issues, and at the moment for Proxmox 7 I recommend using Telmate/proxmox version 2.9.0. The latest version, 2.9.14, has difficulties handling cloud-init, which leads to a 50% chance of getting a VM that requires manual drive reconfiguration. As of 2024/09/06 there is no stable 3.0.1 release.

If you happen to have the latest one and would like to downgrade, remove .terraform and .terraform.lock.hcl and then initialize once again with the following command:

terraform init

Generate Ubuntu 22.04-4 template for Proxmox with Packer

A few versions back the Ubuntu project changed its way of automating installations. Instead of preseeding you now have the autoinstall feature. The Packer project structure contains a few files, and I will start with ubuntu-22-template/http/user-data containing cloud-config:

#cloud-config
autoinstall:
  version: 1
  locale: en_US
  ssh:
    install-server: true
    allow-pw: true
    disable_root: true
    ssh_quiet_keygen: true
    allow_public_ssh_keys: true
  packages:
    - qemu-guest-agent
    - sudo
  storage:
    layout:
      name: lvm
      sizing-policy: all
      # password: xxx
  user-data:
    package_upgrade: false
    timezone: Europe/Warsaw
    users:
      - name: temporary
        groups: [sudo]
        lock-passwd: false
        sudo: ALL=(ALL) NOPASSWD:ALL
        shell: /bin/bash
        passwd: "here you place SHA512 generated hash of a password"

In order to turn LUKS on, uncomment the storage.layout.password field and set the desired password. The users.passwd value can be generated with mkpasswd using SHA-512. Next is ubuntu-22-template/files/99-pve.cfg:

datasource_list: [ConfigDrive, NoCloud]

Credentials get their own file (./credentials.pkr.hcl). You can of course place them directly into your template file, but if you keep those files in SCM the secrets will be stored permanently and shared with others. That is why you should keep this file separate and not include it in your commits:

proxmox_api_url = "https://192.168.2.10:8006/api2/json"

proxmox_api_token_id = "root@pam!root-token"

proxmox_api_token_secret = "your Proxmox token"

my_ssh_password = "your new VM SSH password"

Finally, there is the ubuntu-22-template/ubuntu-22-raw.pkr.hcl file, where you define variables, source and build. We source the ISO image and define Proxmox VE Qemu VM parameters. The most crucial and cryptic part is providing a valid boot_command. The http* settings refer to your machine serving files over HTTP, while the ssh* settings refer to the remote machine (the newly created VM on Proxmox). Our local machine serves the installation instructions over HTTP; they are passed to the remote machine and executed during system installation.

variable "proxmox_api_url" {
  type = string
}

variable "proxmox_api_token_id" {
  type = string
}

variable "proxmox_api_token_secret" {
  type = string
  sensitive = true
}

variable "my_ssh_password" {
  type = string
  sensitive = true
}

source "proxmox-iso" "ubuntu-server-jammy" {
  proxmox_url = "${var.proxmox_api_url}"
  username = "${var.proxmox_api_token_id}"
  token = "${var.proxmox_api_token_secret}"
  insecure_skip_tls_verify = true

  node = "lab"
  vm_id = "141"
  vm_name = "z10-ubuntu-22-template-RAW"
  template_description = "Ubuntu Server Raw Encrypted"

  iso_file = "local:iso/ubuntu-22.04.4-live-server-amd64.iso"
  iso_storage_pool = "local"
  unmount_iso = true
  qemu_agent = true

  scsi_controller = "virtio-scsi-single"

  disks {
    disk_size = "10G"
    format = "raw"
    storage_pool = "vms1"
    storage_pool_type = "directory"
    type = "virtio"
  }

  cores = "2"
  memory = "4096"

  network_adapters {
    model = "virtio"
    bridge = "vmbr0"
    firewall = "false"
  }

  cloud_init = true
  cloud_init_storage_pool = "local"

  boot_command = [
    "<esc><wait>",
    "e<wait>",
    "<down><down><down><end>",
    "<bs><bs><bs><bs><wait>",
    "ip=${cidrhost("192.168.2.0/24", 100)}::${cidrhost("192.168.1.0/24", 1)}:${cidrnetmask("192.168.0.0/22")}::::${cidrhost("1.1.1.0/24", 1)}:${cidrhost("9.9.9.0/24", 9)} ",
    "autoinstall ds=nocloud-net\\;s=http://{{ .HTTPIP }}:{{ .HTTPPort }}/ ---<wait>",
    "<f10><wait>"
  ]

  boot = "c"
  boot_wait = "5s"

  http_directory = "ubuntu-22-template/http"
  http_bind_address = "IP of machine from which you will run Packer"
  http_port_min = 8802
  http_port_max = 8802

  ssh_host = "192.168.2.100" # new VM proposed IP address
  ssh_username = "temporary"
  ssh_password = "${var.my_ssh_password}"
  ssh_timeout = "20m"
}

build {
  name = "ubuntu-server-jammy"
  sources = ["proxmox-iso.ubuntu-server-jammy"]

  provisioner "shell" {
    inline = [
      "while [ ! -f /var/lib/cloud/instance/boot-finished ]; do echo 'Waiting for cloud-init...'; sleep 1; done",
      "sudo rm /etc/ssh/ssh_host_*",
      "sudo truncate -s 0 /etc/machine-id",
      "sudo apt -y autoremove --purge",
      "sudo apt -y clean",
      "sudo apt -y autoclean",
      "sudo cloud-init clean",
      "sudo rm -f /etc/cloud/cloud.cfg.d/subiquity-disable-cloudinit-networking.cfg",
      "sudo rm -f /etc/netplan/00-installer-config.yaml",
      "sudo sync"
    ]
  }

  provisioner "file" {
    source = "ubuntu-22-template/files/99-pve.cfg"
    destination = "/tmp/99-pve.cfg"
  }

  provisioner "shell" {
    inline = [ "sudo cp /tmp/99-pve.cfg /etc/cloud/cloud.cfg.d/99-pve.cfg" ]
  }
}
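
The ip= argument inside boot_command is the densest line of the whole template, so here is a minimal sketch that splits it into named locals. The local names (vm_ip, gateway, netmask, dns_first, dns_second, kernel_ip_arg) are my own and purely illustrative; they are not part of the template above. The field order follows the Linux kernel ip= parameter (client, server, gateway, netmask, hostname, device, autoconf, first DNS, second DNS), and the fields this setup does not use are left empty.

```hcl
# Hypothetical locals block for ubuntu-22-raw.pkr.hcl; names are illustrative.
locals {
  vm_ip      = cidrhost("192.168.2.0/24", 100) # 192.168.2.100, same as ssh_host
  gateway    = cidrhost("192.168.1.0/24", 1)   # 192.168.1.1
  netmask    = cidrnetmask("192.168.0.0/22")   # 255.255.252.0
  dns_first  = cidrhost("1.1.1.0/24", 1)       # 1.1.1.1
  dns_second = cidrhost("9.9.9.0/24", 9)       # 9.9.9.9

  # ip=<client>:<server>:<gateway>:<netmask>:<hostname>:<device>:<autoconf>:<dns0>:<dns1>
  # server, hostname, device and autoconf are intentionally empty.
  kernel_ip_arg = "ip=${local.vm_ip}::${local.gateway}:${local.netmask}::::${local.dns_first}:${local.dns_second} "
}
```

With such a block in place, the long ip= line in boot_command could be replaced by local.kernel_ip_arg. The resulting kernel argument is the same; only the readability changes.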

To run it:

packer build -var-file=credentials.pkr.hcl ubuntu-22-template/ubuntu-22-raw.pkr.hcl
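
The proxmox-iso source used above is delivered as a Packer plugin, and the template as shown does not declare it. A minimal sketch of such a declaration follows, assuming the plugin is taken from github.com/hashicorp/proxmox; the version constraint is only an example and should be adjusted to whatever works with your Proxmox release.

```hcl
# Assumed plugin declaration, placed at the top of ubuntu-22-raw.pkr.hcl.
packer {
  required_plugins {
    proxmox = {
      version = ">= 1.1.3" # illustrative constraint, not a tested recommendation
      source  = "github.com/hashicorp/proxmox"
    }
  }
}
```

With this block present, running packer init ubuntu-22-template/ubuntu-22-raw.pkr.hcl once before the first build should download the plugin.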

The installation process is automated and you do not see the usual configuration screens. Instead we provide the autoinstall configuration and leave a few options, namely user details and network configuration, to be set up later by cloud-init. This way we can automate deployments of such a system, which will be shown in a moment in the Terraform section of this article.

A full overview of project structure is as follows:

├── credentials.pkr.hcl
├── main.tf
├── packer_cache
│   └── port
├── terraform.tfstate
├── terraform.tfstate.backup
└── ubuntu-22-template
    ├── files
    │   └── 99-pve.cfg
    ├── http
    │   ├── meta-data
    │   └── user-data
    └── ubuntu-22-raw.pkr.hcl

5 directories, 8 files

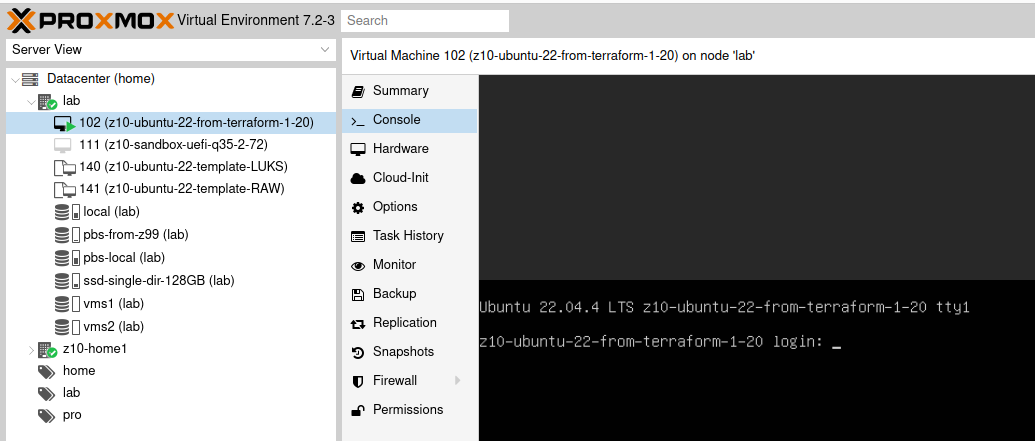

After a successful Ubuntu installation the system will reboot and be converted into a template, so it can later be used as a base for further systems, either as a linked clone or a full clone. If you prefer maximum flexibility, opt for a full clone, because you will not have any constraints or limitations concerning VM usage and migration.

Deploy multiple Ubuntu VMs with Terraform

You can create a new VM from the Proxmox VM template manually in the Proxmox UI. However, creating 100 VMs that way could take a while. That is where Terraform comes in: with the help of a plugin it is able to connect to Proxmox and automate this process for you.

Define a Terraform file (.tf) with terraform, provider and resource sections. The terraform section tells Terraform which plugins you are going to use. The provider section tells it how to access the Proxmox virtualization environment. Finally, the resource section is where you put all the configuration related to your Ubuntu 22.04-4 VM backed by cloud-init. We start with terraform and the required provider plugins. Depending on whether your Proxmox version is 7 or 8, you will need to give a different resource configuration:

terraform {
  required_providers {
    proxmox = {
      source = "telmate/proxmox"
      version = "2.9.0" # this version has the greatest compatibility
    }
  }
}

Next comes the Proxmox provider. It is also possible to define all sensitive data as variables:

provider "proxmox" {
  pm_api_url = "https://192.168.2.10:8006/api2/json"
  pm_user = "root@pam"
  pm_password = "xxx"
  pm_tls_insecure = true
}
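
As a minimal sketch of the variable-based approach, the provider block could look like the one below and would replace the hardcoded version rather than sit next to it. The variable names pm_user and pm_password are my own choice for illustration.

```hcl
# Hypothetical variable names; only the provider arguments come from the block above.
variable "pm_user" {
  type = string
}

variable "pm_password" {
  type      = string
  sensitive = true # keeps the value out of plan and apply output
}

provider "proxmox" {
  pm_api_url      = "https://192.168.2.10:8006/api2/json"
  pm_user         = var.pm_user
  pm_password     = var.pm_password
  pm_tls_insecure = true
}
```

The values can then be supplied from a terraform.tfvars file kept out of version control, or from environment variables such as TF_VAR_pm_user and TF_VAR_pm_password.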

First you need to initialize the Terraform “backend” and install plugins. You can do this with only the terraform and provider sections in place if you want, or after you complete the full spec of your tf file.

terraform init

Finally, the resource itself:

resource "proxmox_vm_qemu" "ubuntu_vm" {
  name = "z10-ubuntu-22-from-terraform-1-20"
  target_node = "lab"
  clone = "z10-ubuntu-22-template-RAW"
  memory = 4000
  cores = 2

  network {
    bridge = "vmbr0"
    model = "virtio"
  }

  disk {
    slot = 0
    storage = "vms1"
    size = "10G"
    type = "virtio"
  }

  os_type = "cloud-init"
  ipconfig0 = "ip=192.168.2.20/22,gw=192.168.1.1"
  ciuser = "xxx"
  cipassword = "xxx"
}

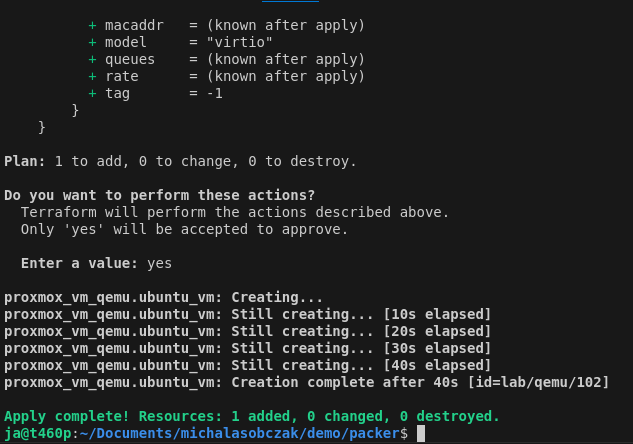

To run this Terraform script, first check it with the plan command and then execute it with the apply command:

terraform plan

terraform apply

With that, this mechanism is going to fully clone the template as a new virtual machine with the given cloud-init definitions concerning user and network configuration.
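
The resource above creates a single VM. To get several copies from the same template, one option is Terraform's count meta-argument. The following is a minimal sketch that would replace the single resource; the number of copies, the name suffix and the way the IP address is derived from count.index are my assumptions for illustration, and I have not verified how provider version 2.9.0 behaves when cloning many VMs in parallel.

```hcl
resource "proxmox_vm_qemu" "ubuntu_vm" {
  count       = 3 # illustrative number of copies
  name        = "z10-ubuntu-22-from-terraform-${count.index + 1}"
  target_node = "lab"
  clone       = "z10-ubuntu-22-template-RAW"
  memory      = 4000
  cores       = 2

  network {
    bridge = "vmbr0"
    model  = "virtio"
  }

  disk {
    slot    = 0
    storage = "vms1"
    size    = "10G"
    type    = "virtio"
  }

  os_type = "cloud-init"

  # count.index starts at 0, so the copies get 192.168.2.20, .21 and .22.
  ipconfig0  = "ip=192.168.2.${20 + count.index}/22,gw=192.168.1.1"
  ciuser     = "xxx"
  cipassword = "xxx"
}
```

Changing count and running terraform apply again adds or removes VMs at the end of the sequence, so the first copies keep their names and addresses.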

I prepared two sample templates, one with LUKS disk encryption and the other without. For demo purposes an unencrypted drive is enough, but for production use encryption should be your default way of installing operating systems.

Checkpoint: we have created an Ubuntu template with Packer and used this template to create a new VM with Terraform.

Further reading

- https://github.com/Telmate/terraform-provider-proxmox/tree/v2.9.0

- https://registry.terraform.io/providers/Telmate/proxmox/2.9.0/docs/resources/vm_qemu