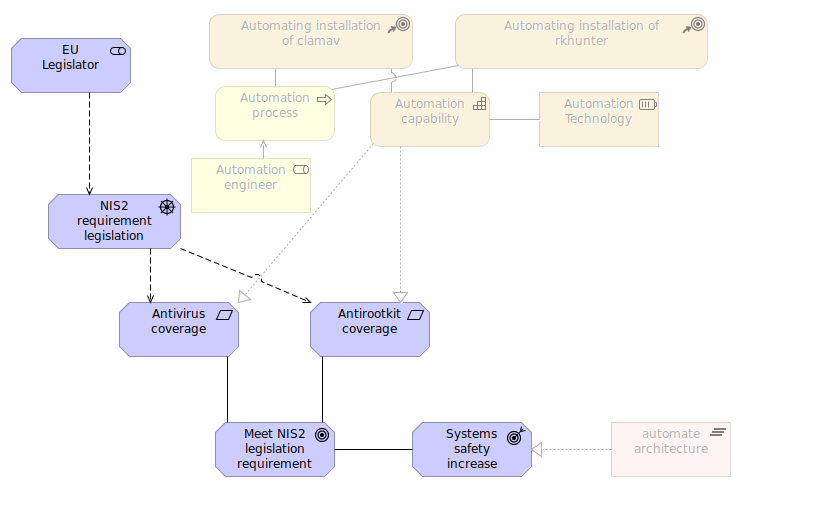

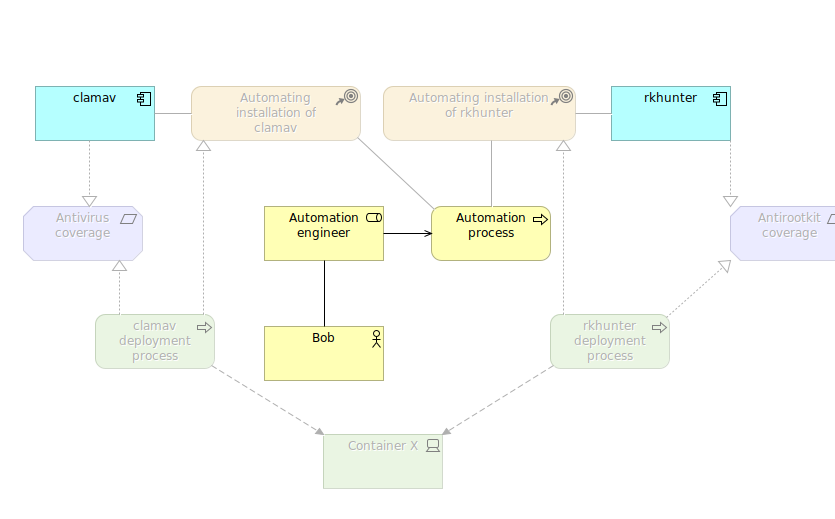

If you run a digital services platform or critical infrastructure, you are most probably covered by NIS 2 and its requirements, including those concerning information security. Even if you are not covered by NIS 2, you may still benefit from its rules, which appear similar to those of ISO 27001. In this article I show how to automatically deploy anti-rootkit and anti-virus software to your Linux workstations and servers.

TLDR

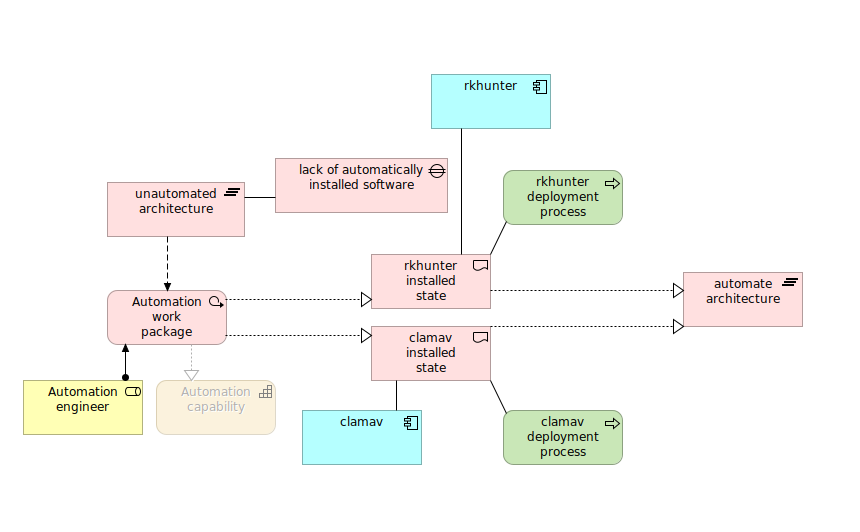

By using the rkhunter anti-rootkit and the ClamAV anti-virus you get closer to NIS 2 and ISO 27001 compliance and farther away from threats like cryptocurrency miners and ransomware. You can automate the deployment with Ansible.

Course of action

- Prepare Proxmox virtualization host server

- Create 200 LXC containers

- Start and configure containers

- Install rkhunter and scan systems

- Install ClamAV and scan systems

What is NIS 2?

The NIS 2 Directive (Directive (EU) 2022/2555) is a legislative act that aims to achieve a high common level of cybersecurity across the European Union. Member States must ensure that essential and important entities take appropriate and proportionate technical, operational and organisational measures to manage the risks posed to the security of network and information systems, and to prevent or minimise the impact of incidents on recipients of their services and on other services. The measures must be based on an all-hazards approach.

source: https://www.nis-2-directive.com/

Aside from being a piece of EU legislation, NIS 2 can be beneficial from a security point of view. However, failing to comply with NIS 2 can cause significant damage to an organization's budget.

Non-compliance with NIS2 can lead to significant penalties. Essential entities may face fines of up to €10 million or 2% of global turnover, while important entities could incur fines of up to €7 million or 1.4%. There’s also a provision that holds corporate management personally liable for cybersecurity negligence.

source: https://metomic.io/resource-centre/a-complete-guide-to-nis2

What are the core concepts of NIS 2?

To implement NIS 2 you will need to cover various topics concerning technology and its operations, such as:

- Conduct risk assessment

- Implement security measures

- Set up supply chain security

- Create incident response plan

- Perform regular cybersecurity awareness and training

- Perform regular monitoring and reporting

- Plan and perform regular audits

- Document processes (including DRS, BCP etc)

- Maintain compliance by reviewing and improving until coverage is complete

Who should be interested?

Because implementing NIS 2 requirements affects a business as a whole, it should interest various departments across the organization: not only IT, but technology in general as well as business and operations. From the employees' perspective, they will be required to take part in cybersecurity awareness training. In other words, NIS 2 affects the whole organization.

How to define workstation and server security

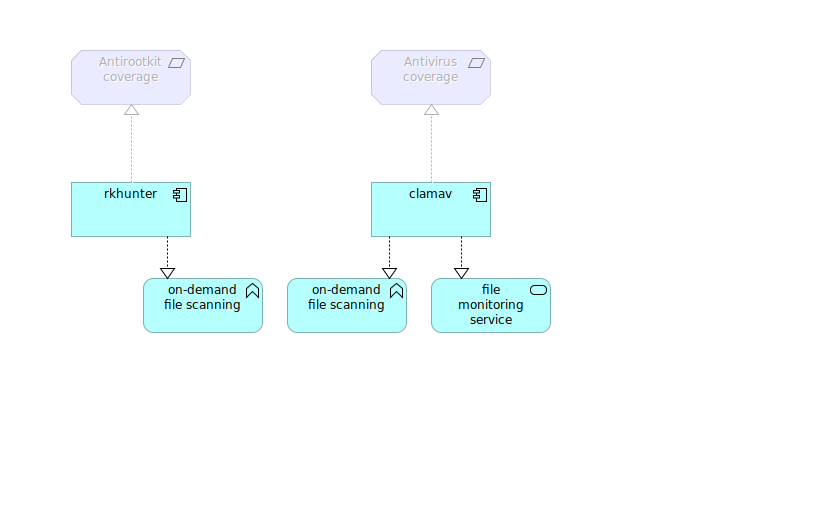

We can define a workstation as a desktop or laptop computer that is physically available to its user. A server, on the other hand, is a computing entity intended to offload workstation tasks and to provide multi-user capabilities. So a server can also be a virtual machine or a system container instance (such as LXC).

The security concepts for workstations and servers are basically the same, as the two share many traits. Both run an operating system with some kind of kernel. Both run system-level software alongside user-level software. Both are vulnerable to malicious traffic, software and incoming data, especially in the form of websites. There is one major difference, however, and it affects workstation users the most: the tasks performed on a workstation vary far more. Server tasks vary less, but because server instances run out of sight, even obvious threats can go unnoticed.

So, both workstations and servers should run either EDR (Endpoint Detection and Response) or antivirus together with anti-rootkit software. Drives should be encrypted with LUKS (or BitLocker on Windows). Users should work on least-privileged accounts, should not connect to unknown wireless networks, and should not plug unknown devices into the computer's ports (a USB device could be a keylogger, for instance).

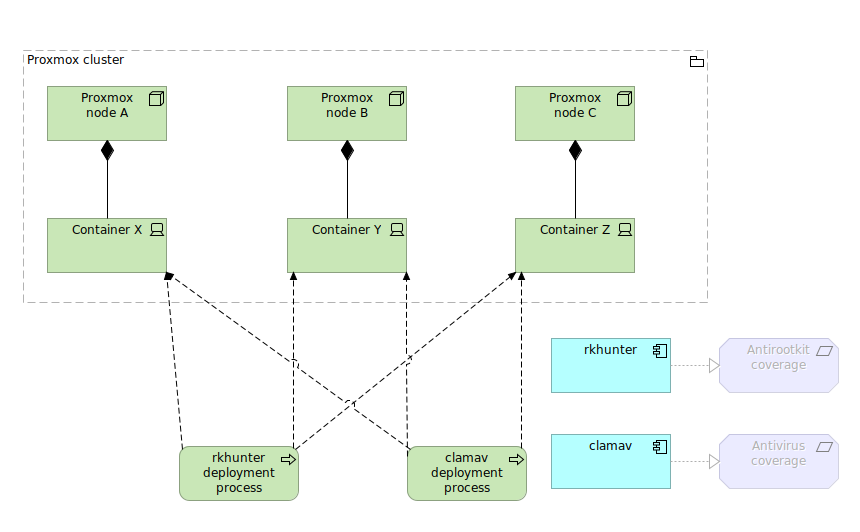

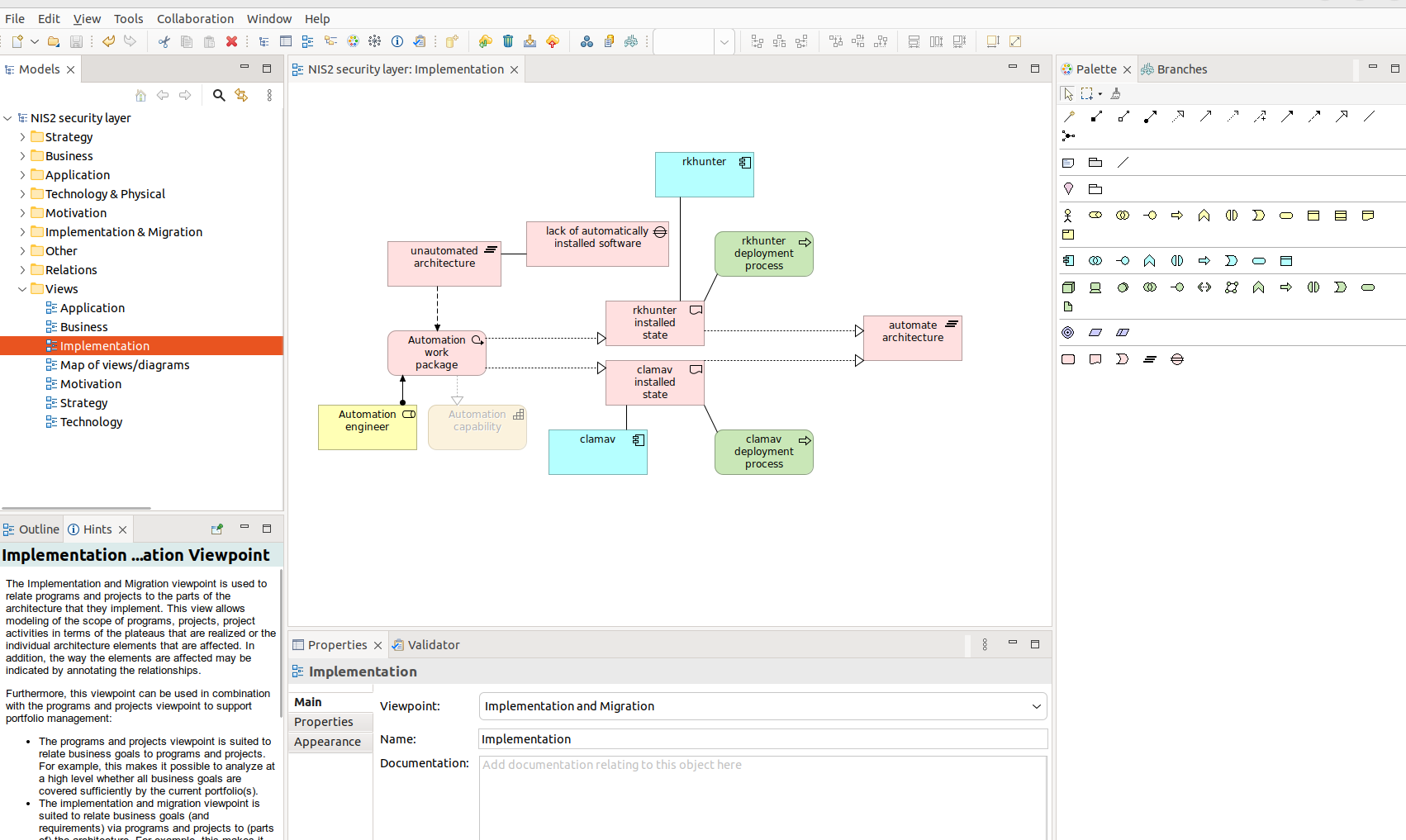

Prepare 200 LXC containers on Proxmox box

This section shows how to create 200 LXC containers for testing purposes and then, using Ansible, how to install and run anti-rootkit and anti-virus software, rkhunter and ClamAV respectively. Why test on that many containers, you may ask? With automation it is necessary to verify how the remote hosts perform as well as how we collect and read the results on our side. In our case all 200 containers are placed on a single Proxmox node, so it is critically important to check whether it can handle that many.

The Ansible software package lets us automate work by defining “playbooks”, which are groups of tasks that use various integration components (modules). Aside from running playbooks, you can also run ad hoc commands without any file-based definition; for instance, you can use the shell module to send commands to remote hosts. A wide variety of Ansible extensions is available.

System preparation

To start using Ansible with Proxmox you need to install the “proxmoxer” Python package. This requires Python's pip, which on Ubuntu is provided by the python3-pip package.

    apt update
    apt install python3-pip
    pip install proxmoxer

To install Ansible (in Ubuntu):

    sudo apt-add-repository ppa:ansible/ansible
    sudo apt update
    sudo apt install ansible

Then, in /etc/ansible/ansible.cfg, set the following option, which skips the host key check during SSH connections.

    [defaults]
    host_key_checking = False

Containers creation

Next, define a playbook for creating the containers. You need to pass the Proxmox API details, your network configuration, the disk storage, and the name of the OS template of your choice. I used Ubuntu 22.04, whose template is placed on the storage named “local”. My target container storage is “vms1”, with 1 GB of storage for each container. The loop uses range(20, 221), which excludes its upper bound, so the last octet runs from 20 through 220.

The inventory for this one should contain only the Proxmox box on which we are going to install 200 LXC containers.

    ---
    - name: Proxmox API
      hosts: proxmox-box
      vars:
        ansible_ssh_common_args: '-o ServerAliveInterval=60'
      serial: 1
      tasks:
        - name: Create new container with minimal options
          community.general.proxmox:
            node: lab
            api_host: 192.168.2.10:8006
            api_user: root@pam
            api_token_id: root-token
            api_token_secret: TOKEN-GOES-HERE
            password: PASSWORD-GOES-HERE
            hostname: "container-{{ item }}"
            ostemplate: 'local:vztmpl/ubuntu-22.04-standard_22.04-1_amd64.tar.zst'
            force: true
            disk: "vms1:1"
            netif:
              net0: "name=eth0,gw=192.168.1.1,ip=192.168.2.{{ item }}/22,bridge=vmbr0"
            cores: 2
            memory: 4000
          # "| list" turns the range object into a plain list for loop
          loop: "{{ range(20, 221) | list }}"

And then run this playbook to install containers:

    ansible-playbook containers-create.yml -i inventory.ini -u root

Start and configure containers

To start the newly created containers, use a shell loop with the pct command (run it on the Proxmox box):

    for i in $(pct list | grep -v "VMID" | cut -d " " -f1); do
        pct start "$i"
        echo "$i"
    done

To help yourself with generating IP addresses for your containers you can use “prips”:

    apt install prips
    prips 192.168.2.0/24 > hosts.txt
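Note that the prips call above lists every address in the /24, including addresses that have no container behind them. As a minimal sketch, assuming the containers received 192.168.2.20 through 192.168.2.220 as in the creation playbook, a small helper can emit only that range. The function name `gen_hosts` is hypothetical and used here for illustration only.

```shell
# gen_hosts PREFIX START END
# Print one IPv4 address per line, from PREFIX.START to PREFIX.END inclusive.
gen_hosts() {
    prefix=$1
    i=$2
    end=$3
    while [ "$i" -le "$end" ]; do
        printf '%s.%s\n' "$prefix" "$i"
        i=$((i + 1))
    done
}

# Example, matching the range used by the creation playbook:
#   gen_hosts 192.168.2 20 220 > hosts.txt
```

With an inventory limited to existing hosts, Ansible does not spend time reporting unreachable addresses.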

For demo purposes only: next, enable SSH login for the root user, as it is our only user so far and it cannot log in yet. In day-to-day work you should use an unprivileged user. Use a shell loop and the “pct” command:

    for i in $(pct list | grep -v "VMID" | cut -d " " -f1); do
        pct exec "$i" -- sed -i 's/#PermitRootLogin prohibit-password/PermitRootLogin yes/g' /etc/ssh/sshd_config
        echo "$i"
    done

    for i in $(pct list | grep -v "VMID" | cut -d " " -f1); do
        pct exec "$i" -- service ssh restart
        echo "$i"
    done
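The loops in this section all repeat the same VMID extraction. A minimal sketch of a reusable helper follows; it assumes that the first line of `pct list` output is the column header and that the VMID is the first column. The name `list_vmids` is hypothetical.

```shell
# list_vmids: read `pct list` output on stdin and print one VMID per line.
# Skips the header line and any line whose first column is not numeric.
list_vmids() {
    awk 'NR > 1 && $1 ~ /^[0-9]+$/ { print $1 }'
}

# Example, on the Proxmox host:
#   pct list | list_vmids | while read -r id; do pct start "$id"; done
```

Matching on a numeric first column is slightly more robust than filtering out the header by name, as it also ignores blank or unexpected lines.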

Checkpoint: so far we have created, started and configured 200 LXC containers, ready for further software installation.

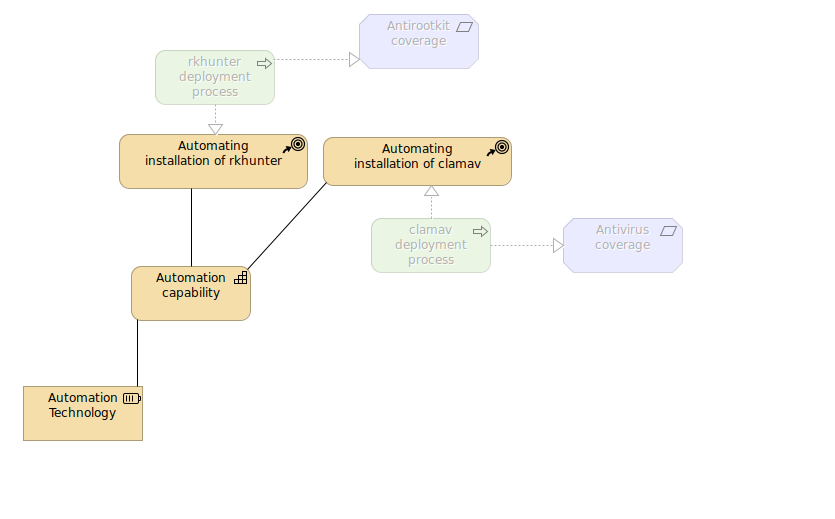

rkhunter: anti-rootkit software deployment

You may ask whether anti-rootkit software has a real-world use case. It definitely does. From my personal experience I can say that even when (or rather, especially when) you build your systems layer on well-known brands such as public cloud operators, you can face the risk of open vulnerabilities. Cloud operators and other digital service providers often rely on content from third-party providers, so the quality and security level is effectively only as good as what those third parties provide. You may receive outdated and unpatched software, open user accounts and so on. This can lead to system breaches, which in turn can lead to data theft, ransomware, spyware, cryptocurrency mining and more.

There are similarities between anti-rootkit and anti-virus software. rkhunter is much more targeted at specific use cases: instead of checking hundreds of thousands of virus signatures, it looks for a few hundred well-known signs of a rootkit being present in your system. You could say it is a specialized form of anti-virus software.

Installation of anti-rootkit

First install rkhunter with the following playbook:

    ---
    - name: install rkhunter
      hosts: all
      tasks:
        - name: Install rkhunter Ubuntu
          when: ansible_distribution == "Ubuntu"
          ansible.builtin.apt:
            name: rkhunter
            state: present

        - name: Install epel-release CentOS
          when: ansible_distribution == "CentOS"
          ansible.builtin.yum:
            name: epel-release
            state: present

        - name: Install rkhunter CentOS
          when: ansible_distribution == "CentOS"
          ansible.builtin.yum:
            name: rkhunter
            state: present

Execute it with Ansible:

    ansible-playbook rkhunter-install.yml -i hosts.txt -u root

Scanning systems with anti-rootkit

And then scan with rkhunter:

    ---
    - name: Run rkhunter
      hosts: all
      tasks:
        - name: Run rkhunter
          ansible.builtin.command: rkhunter -c --sk -q
          register: rkrun
          ignore_errors: true
          failed_when: "rkrun.rc not in [ 0, 1 ]"

Execute it with Ansible:

    ansible-playbook rkhunter-run.yml -i hosts.txt -u root

To verify the results it is much easier to run a separate ad hoc command with ansible rather than with ansible-playbook, which runs playbooks:

    ansible all -i hosts.txt -m shell -a "cat /var/log/rkhunter.log | grep Possible | wc -l" -u root -f 12 -o

Results interpretation and reaction

What if you see some “Possible rootkits”? First of all, stay calm and follow your incident management procedure, if you have one. Sample output of the command above, with no findings on any host:

    192.168.2.23 | CHANGED | rc=0 | (stdout) 0
    192.168.2.31 | CHANGED | rc=0 | (stdout) 0
    192.168.2.26 | CHANGED | rc=0 | (stdout) 0
    192.168.2.29 | CHANGED | rc=0 | (stdout) 0
    192.168.2.24 | CHANGED | rc=0 | (stdout) 0
    192.168.2.27 | CHANGED | rc=0 | (stdout) 0
    192.168.2.22 | CHANGED | rc=0 | (stdout) 0
    192.168.2.28 | CHANGED | rc=0 | (stdout) 0
    192.168.2.21 | CHANGED | rc=0 | (stdout) 0
    192.168.2.20 | CHANGED | rc=0 | (stdout) 0
    192.168.2.25 | CHANGED | rc=0 | (stdout) 0

If you do not have a proper procedure, follow the basic escalation path within your engineering team. Before isolating the possibly infected system, first check whether the alert is a false positive. There are plenty of situations in which tools like rkhunter detect something unusual, for example a Zabbix Proxy process with some memory alignment, or a script that replaces a basic system utility such as wget. However, if rkhunter finds a well-known rootkit, you should shut the system down or at least isolate it, or take whatever other action you have planned for such situations.

If you find a single infection within your environment, there is a high chance that other systems are infected as well, so you should be ready to scan everything reachable from it, especially if you have password-less connections between your servers. For more about possible scenarios, see the MITRE ATT&CK knowledge base and framework.
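With 200 hosts, reading the one-line results by eye gets tedious. As a minimal sketch, assuming the `ansible ... -o` output format shown earlier in this section (`HOST | CHANGED | rc=0 | (stdout) COUNT`), the filter below prints only the hosts that reported a non-zero count. The name `flag_hosts` is hypothetical.

```shell
# flag_hosts: read `ansible ... -o` one-line results on stdin and print
# "HOST COUNT" for every host whose reported count is greater than zero.
flag_hosts() {
    awk -F'|' '/\(stdout\)/ {
        count = $NF
        sub(/.*\(stdout\) */, "", count)   # keep only the text after "(stdout)"
        host = $1
        gsub(/ /, "", host)                # trim padding around the address
        if (count + 0 > 0) print host, count
    }'
}

# Example:
#   ansible all -i hosts.txt -m shell -a "grep -c Possible /var/log/rkhunter.log" -u root -o | flag_hosts
```

Lines without a `(stdout)` marker, such as unreachable hosts, are ignored by this filter and still need to be reviewed separately.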

ClamAV: anti-virus deployment

What is the purpose of having anti-virus software on your systems? Similar to anti-rootkit software, an anti-virus utility keeps your system safe from common threats such as malware, adware and keyloggers. However, it has many more signatures and scans everything, so a complete scan takes a lot longer than with anti-rootkit software.

Installation of anti-virus

First, install ClamAV with the following playbook:

    ---
    - name: ClamAV
      hosts: all
      vars:
        ansible_ssh_common_args: '-o ServerAliveInterval=60'
      tasks:
        - name: Install ClamAV
          when: ansible_distribution == "Ubuntu"
          ansible.builtin.apt:
            name: clamav
            state: present

        - name: Install epel-release CentOS
          when: ansible_distribution == "CentOS"
          ansible.builtin.yum:
            name: epel-release
            state: present

        - name: Install ClamAV CentOS
          when: ansible_distribution == "CentOS"
          ansible.builtin.yum:
            name: clamav
            state: present

Then execute this playbook:

    ansible-playbook clamav-install.yml -i hosts.txt -u root

Each host with ClamAV installed also runs the clamav-freshclam service, a tool that updates the virus signature databases locally. The upstream servers apply rate limits, so it is suggested to set up a private mirror using the “cvdupdate” tool. If you leave things as they are, all hosts may ask for updates at the same time, hit the rate limit, and get blocked for some period of time. If your infrastructure spans several providers, you should go for multiple private mirrors.
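Independently of a private mirror, one way to avoid all hosts asking at the same moment is to spread manual update runs over time. The sketch below is an assumption of mine, not something the ClamAV tooling provides: it derives a stable per-host delay from a checksum of the hostname. The name `stagger_delay` is hypothetical.

```shell
# stagger_delay HOST MAX: print a delay in seconds in the range 0..MAX-1,
# derived from a checksum of HOST, so each host always gets the same slot.
stagger_delay() {
    crc=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
    echo $((crc % $2))
}

# Example, assuming freshclam is run manually rather than by the
# clamav-freshclam service:
#   sleep "$(stagger_delay "$(hostname)" 3600)" && freshclam
```

A deterministic delay keeps runs reproducible per host, whereas a random delay would spread load just as well but make the timing harder to trace in logs.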

Scanning systems with anti-virus

You can scan either a particular directory or the complete filesystem. You could run the scan from a playbook, but you can also run it promptly with the ansible command, without writing a playbook. It seems that ClamAV, contrary to rkhunter, returns fewer warnings, so it is much easier to interpret the results manually without relying on return codes.

    ansible all -i hosts.txt -m shell -a "clamscan --infected -r /usr | grep Infected" -v -f 24 -u root -o

You can also run ClamAV on the whole filesystem while skipping /proc and /sys, which hold virtual filesystems used for communication between the kernel, hardware and software. The --exclude-dir option takes a regular expression, so the paths are anchored with ^.

    clamscan --exclude-dir='^/proc' --exclude-dir='^/sys' -i -r /

It is possible to install ClamAV as a system service (daemon), but that is much harder to accomplish, as there may be difficulties with AppArmor (or a similar solution) and file permissions. It will also put load on your systems at random times, which is not exactly what we would like to experience. You may prefer to put scans in a cron schedule instead.

Please note: I will not suggest disabling AppArmor, as that would conflict with NIS 2. On the contrary, I encourage you to learn how to work with AppArmor and SELinux, as they are required by various standards such as DISA STIG.
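The cron-based alternative to the daemon could look roughly like the sketch below. It only prints an /etc/cron.d style entry; the function name `clamscan_cron_line` and the log path are hypothetical, while `--infected`, `-r` and `--log` are regular clamscan options.

```shell
# clamscan_cron_line MINUTE HOUR DIR: print an /etc/cron.d style entry that
# scans DIR recursively as root once a day and reports only infected files.
# The log file path is an assumption chosen for illustration.
clamscan_cron_line() {
    printf '%s %s * * * root clamscan --infected -r %s --log=/var/log/clamav/cron-scan.log\n' "$1" "$2" "$3"
}

# Example (the target file name is also hypothetical):
#   clamscan_cron_line 17 3 /usr > /etc/cron.d/clamscan
```

Choosing a different minute and hour per host keeps 200 containers on one Proxmox node from scanning at the same time.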

To run the ClamAV daemon, the main virus database must be present on your system. If it is missing, the service will not start; this is directly linked to the freshclam service, which downloads the database.

    ○ clamav-daemon.service - Clam AntiVirus userspace daemon
         Loaded: loaded (/lib/systemd/system/clamav-daemon.service; enabled; vendor preset: enabled)
        Drop-In: /etc/systemd/system/clamav-daemon.service.d
                 └─extend.conf
         Active: inactive (dead)
      Condition: start condition failed at Mon 2024-09-02 15:24:40 CEST; 1s ago
                 └─ ConditionPathExistsGlob=/var/lib/clamav/main.{c[vl]d,inc} was not met
           Docs: man:clamd(8)
                 man:clamd.conf(5)
                 https://docs.clamav.net/
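The start condition in the status output above can be checked before trying to start the daemon. A minimal sketch follows; it expands the glob `main.{c[vl]d,inc}` from the unit file into the three file names it covers. The function name `has_main_db` is hypothetical.

```shell
# has_main_db [DIR]: succeed if DIR (default /var/lib/clamav) contains
# main.cvd, main.cld or main.inc, the names matched by the unit's
# ConditionPathExistsGlob=/var/lib/clamav/main.{c[vl]d,inc}.
has_main_db() {
    dir=${1:-/var/lib/clamav}
    for f in "$dir"/main.cvd "$dir"/main.cld "$dir"/main.inc; do
        [ -e "$f" ] && return 0
    done
    return 1
}

# Example:
#   has_main_db || echo "main database missing, wait for freshclam to finish"
```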

Results interpretation and reaction

Running clamscan this way gives results like this sample:

    Using /etc/ansible/ansible.cfg as config file
    192.168.2.33 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.23 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.28 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.42 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.26 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.29 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.38 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.45 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.40 | CHANGED | rc=0 | (stdout) Infected files: 0
    192.168.2.32 | CHANGED | rc=0 | (stdout) Infected files: 0

As it is a manual scan, it is straightforward to identify possible threats. For an automatic scan or an integration with Zabbix, you will need to learn what clamscan can output, the same as with rkhunter.
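For automated scans the exit code is usually easier to handle than the text output. According to the clamscan manual, 0 means no virus was found, 1 means at least one virus was found, and 2 means an error occurred. A minimal sketch of a translator follows; the function name `clamscan_status` and the labels are hypothetical.

```shell
# clamscan_status RC: translate a clamscan exit code into a short label.
# Per the clamscan manual: 0 = no virus found, 1 = virus(es) found,
# 2 = some error(s) occurred. Anything else is reported as unknown.
clamscan_status() {
    case "$1" in
        0) echo clean ;;
        1) echo infected ;;
        2) echo error ;;
        *) echo unknown ;;
    esac
}

# Example:
#   clamscan --infected -r /usr >/dev/null 2>&1; clamscan_status $?
```

Note that in the ad hoc command used earlier, clamscan is piped into grep, so the rc shown by Ansible is grep's exit code, not clamscan's.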

Conclusion

Automation in the form of Ansible can greatly help in deploying anti-rootkit and anti-virus software, rkhunter and ClamAV respectively. These tools will increase the level of security in your environment, provided they cover all the systems that are up and running. Automation itself is not directly required by NIS 2, but it pays off in future use.