Instead of dedicated Hetzner servers, you can use their cloud offering for less demanding use cases. The idea is to have one server running pfSense and another one for regular private use, hidden behind the gateway. It is a bit cheaper than renting a dedicated server: a CX22 costs around 3 – 4 euros per month, compared to at least 50 euros for a dedicated server, so you can run up to 10 virtual cloud servers for the price of a single dedicated one. Choose cloud for “simple” (or rather straightforward) solutions, and dedicated servers for more complicated setups with much higher CPU and RAM demands.

This guide assumes you are running Ubuntu 22 or 24 locally.

Initial setup

First, install Homebrew (brew). If Python packages are missing, install them from the system packages with the python- prefix.

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

Add brew to your shell configuration (replace USER with your username) and load it into the current session:

echo 'eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"' >> /home/USER/.bashrc

eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

Then install hcloud:

brew install hcloud

hcloud manages your Hetzner configuration from the command line. To use it, create an API token in the Hetzner Cloud Console and then create a context.
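Creating the context could look roughly like the sketch below. The `run` helper and the context name `my-project` are made up for illustration; by default the script only prints the commands, and it executes them when `APPLY=1` is set. `hcloud context create` asks for the API token interactively.

```shell
# Dry-run helper (hypothetical): print each command, run it only when APPLY=1.
run() {
  echo "+ $*"
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  fi
}

CONTEXT_NAME="my-project"   # hypothetical context name, pick your own

run hcloud context create "$CONTEXT_NAME"   # prompts for the API token
run hcloud context list                     # the new context should be listed
run hcloud server-type list                 # a read-only call to confirm the token works
```

Save it as a script and run it once without `APPLY` to review the commands, then again with `APPLY=1` to execute them.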

Create cloud servers and networks

Create servers:

hcloud server create --name router --type cx22 --image ubuntu-24.04

hcloud server create --name private-client --type cx22 --image ubuntu-24.04

Create private network:

hcloud network create --name nat-network --ip-range 10.0.0.0/16

hcloud network add-subnet nat-network --network-zone eu-central --type server --ip-range 10.0.0.0/24

Then attach the servers to this network:

hcloud server attach-to-network router --network nat-network --ip 10.0.0.2

hcloud server attach-to-network private-client --network nat-network

Now the important part. To route traffic out of the private network, you need to add a special 0.0.0.0/0 route pointing to the gateway. At the moment it seems this can only be done with hcloud, as the web UI does not allow it:

hcloud network add-route nat-network --destination 0.0.0.0/0 --gateway 10.0.0.2
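To see why 0.0.0.0/0 acts as a catch-all route, the sketch below checks a few destinations against the three ranges used so far. The helpers `ip_to_int` and `in_cidr` are hypothetical names written for this illustration, and the sample destinations are arbitrary.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1            # split the address into its four octets
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if address $1 lies inside CIDR block $2 (for example 10.0.0.0/24).
in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits="${2#*/}"
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Arbitrary sample destinations checked against the ranges from this guide.
for dest in 10.0.0.7 10.0.1.5 8.8.8.8; do
  for range in 10.0.0.0/24 10.0.0.0/16 0.0.0.0/0; do
    if in_cidr "$dest" "$range"; then
      echo "$dest matches $range"
    fi
  done
done
```

A prefix length of 0 produces an empty mask, so every address matches 0.0.0.0/0, including public ones that fall outside 10.0.0.0/16.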

pfSense installation

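This is the second step that is a little complicated to do via the web UI, so it is highly recommended to use hcloud here as well. Downloading and decompressing the pfSense package locally is optional, since attach-iso refers to an image in Hetzner's ISO catalog by name or ID rather than to a local file (hcloud iso list shows the exact names). Pass the ISO image name as a parameter: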

hcloud server attach-iso router pfSense-CE-2.7.2-RELEASE-amd64.iso

hcloud server reset router

Now go through the regular pfSense installation, then detach the ISO:

hcloud server detach-iso router

hcloud server reset router

After rebooting pfSense:

- configure WAN as vtnet0

- configure LAN as vtnet1

- uncheck Block bogon networks

- set LAN to DHCP

- in System – Routing – Static routes, add a static route on LAN for 10.0.0.0/16 via 10.0.0.1

- disable hardware checksum offload in System – Advanced – Networking

- set outbound NAT to hybrid mode

- add a WAN outbound NAT rule with source 10.0.0.0/16 and destination any, translating to the WAN address

- change the source of the LAN firewall catch-all rule from LAN subnets to any

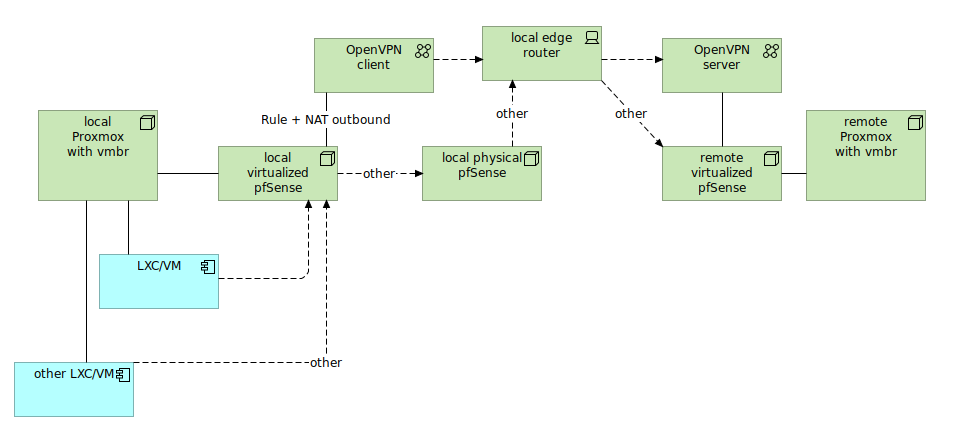

Routing traffic

Once your pfSense router is set up, you need to send traffic from the private-client server to 10.0.0.1, which is Hetzner’s default gateway in every private network. We defined earlier that all traffic should go via router (10.0.0.2), but that rule is applied by the Hetzner gateway, which forwards traffic from private-client to router and from there to its final destination. So in this configuration we do not send traffic directly to the pfSense gateway.
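A quick way to check this on private-client is to look at its default route. The sketch below parses `ip route` output; the function name `default_gateway`, the interface name `enp7s0`, and the sample routing table are assumptions for illustration and were not captured from a real host.

```shell
# Print the default gateway found in `ip route` output read from stdin.
default_gateway() {
  awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Illustrative routing table; interface name and entries are assumed.
sample='default via 10.0.0.1 dev enp7s0
10.0.0.0/16 via 10.0.0.1 dev enp7s0
10.0.0.1 dev enp7s0 scope link'

printf '%s\n' "$sample" | default_gateway
```

On the real server you would run `ip route | default_gateway`. If the result is not 10.0.0.1, adding the route by hand with `sudo ip route add default via 10.0.0.1` is one option, though a route added this way does not survive a reboot.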

It is important to uncheck the blocking of bogon networks, as our router will be passing private traffic on the WAN interface. Setting up WAN outbound NAT is also critical for this routing to work. Finally, pfSense adds a default LAN catch-all rule, which needs to be modified to apply to all sources and not only to LAN subnets; this allows routing traffic via the Hetzner gateway.

The aforementioned aspects fall outside a regular pfSense configuration as used on dedicated servers, because here we have a managed private network that works by different rules, so you need to follow those rules if you want pfSense to act as a gateway.

Afterword

Be sure, of course, to set up your own desired network addressing and naming conventions. If something is unclear, refer to the official Hetzner documentation. After configuring private-client you can disable its public networking: switch the server off, unlink the public IP address, and power it on again. To access private-client from then on, either use the Hetzner Cloud console or set up OpenVPN on your fresh pfSense installation.
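The power-off, unlink, and power-on sequence can also be done with hcloud. The sketch below only prints the commands so they can be reviewed first; `detach_public_ip_plan` is a hypothetical helper and `PRIMARY_IP_ID` is a placeholder for the ID or name shown by `hcloud primary-ip list`. Subcommand names may differ between hcloud versions, so check `hcloud primary-ip --help` before running anything.

```shell
# Print the hcloud commands that would detach a public (primary) IP from a server.
# Nothing is executed here; review the output and run the lines yourself.
detach_public_ip_plan() {
  local server="$1" primary_ip="$2"
  cat <<EOF
hcloud server poweroff $server
hcloud primary-ip unassign $primary_ip
hcloud server poweron $server
EOF
}

# PRIMARY_IP_ID is a placeholder, not a real identifier.
detach_public_ip_plan private-client PRIMARY_IP_ID
```

Note that `poweroff` is a hard power-off, so shutting the operating system down from inside the server first is the gentler route.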