Slight utilization drop when dealing with a multi-GPU setup

TLDR

Power usage and GPU utilization vary between single-GPU and multi-GPU models. Deal with it.

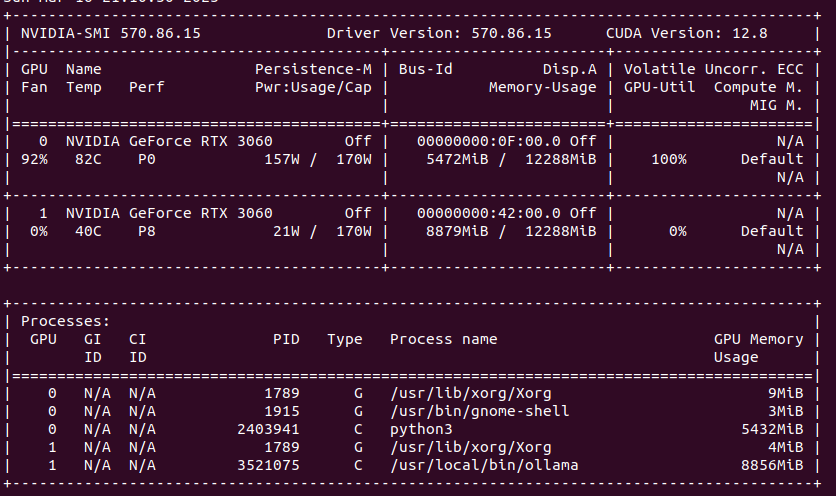

My latest finding is that the load on a single GPU running Ollama/Gemma or Automatic1111/Stable Diffusion is higher than the per-GPU load when Ollama spreads a model across multiple GPUs because it does not fit into one GPU’s memory. Take a look. With Stable Diffusion, GPU utilization sits at 100%, fan speed at 90 to 100%, and temperature above 80 °C.

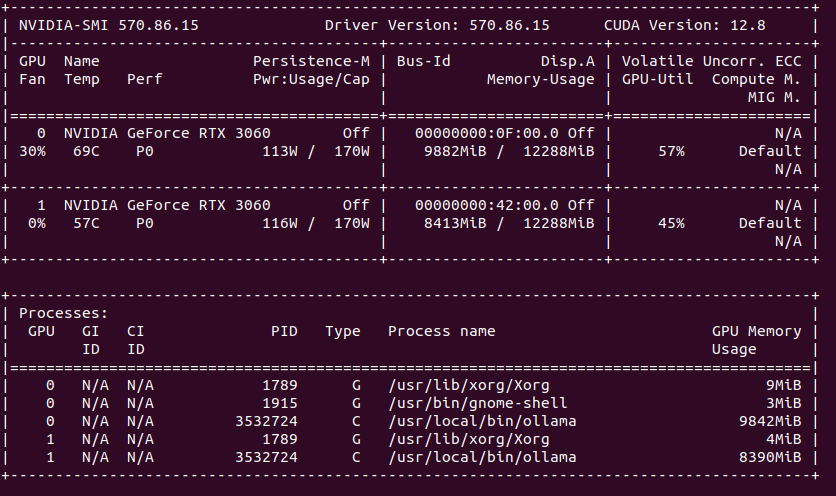

Compare this to a load spread across two GPUs. GPU utilization is clearly much lower, and so are fan speed and temperatures. Total power usage, however, is higher than with single-GPU models.
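If you want these numbers as data rather than screenshots, here is a minimal Python sketch that reads them from `nvidia-smi`. It assumes an NVIDIA driver with `nvidia-smi` on the PATH. The helper names (`parse_gpu_stats`, `summarize`, `read_gpu_stats`) are made up for this post and are not part of Ollama or Automatic1111.

```python
import subprocess

# Fields requested from nvidia-smi, in the order they appear in each CSV row.
QUERY_FIELDS = ["index", "utilization.gpu", "power.draw", "temperature.gpu", "fan.speed"]


def parse_gpu_stats(csv_text):
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for line in csv_text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != len(QUERY_FIELDS):
            continue  # skip lines that do not match the requested fields
        row = {}
        for name, value in zip(QUERY_FIELDS, parts):
            try:
                row[name] = float(value)
            except ValueError:
                row[name] = None  # e.g. "[N/A]" on cards without a fan sensor
        rows.append(row)
    return rows


def summarize(rows):
    """Return GPU count, mean utilization (%) and total power draw (W)."""
    utils = [r["utilization.gpu"] for r in rows if r["utilization.gpu"] is not None]
    power = [r["power.draw"] for r in rows if r["power.draw"] is not None]
    return {
        "gpus": len(rows),
        "mean_utilization": sum(utils) / len(utils) if utils else None,
        "total_power": sum(power) if power else None,
    }


def read_gpu_stats():
    """Query nvidia-smi once and return the parsed per-GPU rows."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_gpu_stats(out)
```

Calling `summarize(read_gpu_stats())` once during a single-GPU run and once during a multi-GPU run gives per-run mean utilization and total power draw, which is the comparison made above. A single sample is noisy, so polling every second or so and averaging is probably more useful.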

What does it mean? Ollama uses only as many GPUs as the model requires rather than all the hardware all the time, so the two cases are not directly comparable. However, it may imply a slight utilization drop when dealing with a multi-GPU setup.