What is OpenVINO?

“Open-source software toolkit for optimizing and deploying deep learning models.”

It has been developed by Intel since 2018. It supports LLMs, computer vision, and generative AI, and runs on Windows, Linux, and macOS. On Ubuntu, version 22.04 LTS or newer is recommended. For GPU inference it relies on OpenCL drivers.

In theory, libraries using OpenCL (such as OpenVINO) should be cross-platform, in contrast to similar vendor-locked solutions like CUDA, so OpenVINO should work on both Intel and AMD hardware. The Internet says that it works, but for now I need to order some additional hardware to check it myself.

Side note: why is the CUDA/NVIDIA vendor lock-in bad? You are forced to buy hardware from a single vendor, so you are exposed to price increases, and if the whole concept fails you are left with nothing. That is why open standards are better than closed ones.

OpenCL readings

For Polish-language speakers, I recommend my book covering OpenCL. There are also various articles, which you can check out here.

Requirements

You can run OpenVINO on Intel Core Ultra series 1 and 2, Xeon 6, Atom X, Atom with SSE 4.2, and various Pentium processors. Most importantly, it runs on Intel Core from the 6th generation onwards. As for GPUs, it supports Intel Arc as well as Intel HD, UHD, Iris Pro, Iris Xe, and Iris Xe Max graphics. Compatibility with 6th-generation Core CPUs and the integrated HD and UHD graphics is the most crucial part.

The Intel OpenVINO documentation also says that it supports the Intel Neural Processing Unit (NPU).

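The VNNI instruction set (AVX-512 VNNI, part of Intel DL Boost) has been available since the 10th Intel Core generation. The AMX instruction set is available on Intel Xeon Scalable processors from the 4th generation (Sapphire Rapids) onwards. Both VNNI and AMX considerably increase inference speed and throughput.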
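On Linux, the kernel lists CPU features as flags in /proc/cpuinfo, so a quick check of what a given machine offers could look like the sketch below. The flag names (`avx512_vnni`, `avx_vnni`, `amx_tile`, `amx_int8`, `amx_bf16`) are the ones the Linux kernel prints; the helper name `detect_features` is hypothetical and made up for this illustration.

```python
from pathlib import Path

# Flag names as printed by the Linux kernel in /proc/cpuinfo.
FEATURES = {
    "VNNI": {"avx512_vnni", "avx_vnni"},
    "AMX": {"amx_tile", "amx_int8", "amx_bf16"},
}


def detect_features(cpuinfo_text: str) -> dict[str, bool]:
    """Report which feature groups appear in the first 'flags' line."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = set(line.split(":", 1)[1].split())
            break
    # A group counts as present if at least one of its flags is listed.
    return {name: bool(flags & wanted) for name, wanted in FEATURES.items()}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    for name, present in detect_features(text).items():
        print(f"{name}: {'yes' if present else 'no'}")
```

A missing flag only means the CPU path will be slower; OpenVINO still runs without these extensions.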

OpenVINO base GenAI package

wget https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-PRODUCTS.PUB

sudo gpg --output /etc/apt/trusted.gpg.d/intel.gpg --dearmor GPG-PUB-KEY-INTEL-SW-PRODUCTS.PUB

echo "deb https://apt.repos.intel.com/openvino/2025 ubuntu22 main" | sudo tee /etc/apt/sources.list.d/intel-openvino-2025.list

sudo apt update

sudo apt install openvino
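To confirm that the runtime actually sees the hardware, you can ask it for the list of devices. The sketch below assumes the OpenVINO Python bindings are importable (for example from the `openvino` package on PyPI, which is a separate install from the apt package above). The function name `list_openvino_devices` is hypothetical; `Core`, `available_devices` and the `FULL_DEVICE_NAME` property come from the OpenVINO Python API.

```python
def list_openvino_devices() -> dict[str, str]:
    """Map each OpenVINO device id (e.g. 'CPU', 'GPU') to its full name."""
    try:
        import openvino as ov  # assumption: Python bindings are installed
    except ImportError:
        # Bindings not available: report nothing instead of crashing.
        return {}
    core = ov.Core()
    return {
        device: str(core.get_property(device, "FULL_DEVICE_NAME"))
        for device in core.available_devices
    }


if __name__ == "__main__":
    devices = list_openvino_devices()
    if not devices:
        print("No OpenVINO devices found (or Python bindings missing)")
    for device, name in devices.items():
        print(f"{device}: {name}")
```

If only `CPU` shows up on a machine with an Intel iGPU, the GPU compute (OpenCL) driver is the first thing to check.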

Run Frigate video surveillance with OpenVINO object detector

On Linux, and Ubuntu 22 in particular, Intel GPUs are exposed through /dev/dri devices, where DRI stands for Direct Rendering Infrastructure. It is a Linux framework that has been present since 1998. The latest version, DRI 3.0, dates from 2013.

We can test the OpenVINO runtime and libraries, and thus the Intel hardware, using Frigate, a DRI device, and a Docker container. Here is the Docker container specification:
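Before passing a device to a container it is worth checking which render nodes exist on the host. A minimal sketch, using only the standard library; the helper name `find_render_nodes` is hypothetical, and the `renderD*` naming is the usual convention for DRM render nodes:

```python
from pathlib import Path


def find_render_nodes(dri_dir: str = "/dev/dri") -> list[str]:
    """Return sorted render node paths such as /dev/dri/renderD128."""
    root = Path(dri_dir)
    if not root.is_dir():
        # No DRI directory: no GPU driver loaded, or not a Linux host.
        return []
    return sorted(str(p) for p in root.glob("renderD*"))


if __name__ == "__main__":
    nodes = find_render_nodes()
    print(nodes if nodes else "No render nodes found")
```

On a machine with a single GPU this typically yields one entry; with both an iGPU and a discrete GPU there will be more, and the right node has to be picked for the container.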

cd
mkdir frigate-config
mkdir frigate-media
sudo docker run -d \
  --name frigate \
  --restart=unless-stopped \
  --stop-timeout 30 \
  --mount type=tmpfs,target=/tmp/cache,tmpfs-size=1000000000 \
  --shm-size=1024m \
  --device /dev/bus/usb:/dev/bus/usb \
  --device /dev/dri/renderD128 \
  -v "$(pwd)/frigate-media":/media/frigate \
  -v "$(pwd)/frigate-config":/config \
  -v /etc/localtime:/etc/localtime:ro \
  -e FRIGATE_RTSP_PASSWORD='password' \
  -p 8971:8971 \
  -p 8554:8554 \
  -p 8555:8555/tcp \
  -p 8555:8555/udp \
  ghcr.io/blakeblackshear/frigate:stable

We use the frigate:stable image. For other detectors, such as TensorRT (Tensor RunTime), we would use the frigate:stable-tensorrt image.

After launching the container, open the logs to grab the admin password:

sudo docker logs -f -n 100 frigate

It should look something like this:

Start with the following configuration file:

mqtt:
  enabled: false
logger:
  logs:
    frigate.record.maintainer: debug
objects:
  track:
    - person
    - car
    - motorcycle
    - bicycle
    - bus
    - dog
    - cat
    - handbag
    - backpack
    - suitcase
record:
  enabled: true
  retain:
    days: 1
    mode: all
  alerts:
    retain:
      days: 4
  detections:
    retain:
      days: 4
snapshots:
  enabled: true
  retain:
    default: 7
  quality: 95
review:
  alerts:
    labels:
      - person
      - car
      - motorcycle
      - bicycle
      - bus
  detections:
    labels:
      - dog
      - cat
      - handbag
      - backpack
      - suitcase
cameras:
  demo:
    enabled: true
    ffmpeg:
      inputs:
        - path: rtsp://user:pass@ip:port/stream
          roles:
            - detect
            - record
      hwaccel_args: preset-vaapi
    detect:
      fps: 4
version: 0.15-1
semantic_search:
  enabled: true
  reindex: false
  model_size: small
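One thing worth noticing in this configuration is that every label listed under review (alerts and detections) also appears under objects.track; a label that is not tracked would never show up in a review item. A small sketch of that consistency check follows. The helper `untracked_review_labels` is hypothetical and operates on a plain dictionary that mirrors the relevant part of the configuration, so it needs no YAML library.

```python
def untracked_review_labels(config: dict) -> set[str]:
    """Return review labels that are missing from objects.track."""
    tracked = set(config.get("objects", {}).get("track", []))
    review = config.get("review", {})
    wanted: set[str] = set()
    for section in ("alerts", "detections"):
        wanted.update(review.get(section, {}).get("labels", []))
    return wanted - tracked


# The relevant part of the configuration above, written as a dictionary.
config = {
    "objects": {
        "track": ["person", "car", "motorcycle", "bicycle", "bus",
                  "dog", "cat", "handbag", "backpack", "suitcase"],
    },
    "review": {
        "alerts": {"labels": ["person", "car", "motorcycle", "bicycle", "bus"]},
        "detections": {"labels": ["dog", "cat", "handbag", "backpack", "suitcase"]},
    },
}

print(untracked_review_labels(config))  # prints set(): every label is tracked
```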

Frigate says that CPU detectors are not recommended. We have not defined an OpenVINO detector in the Frigate configuration yet:

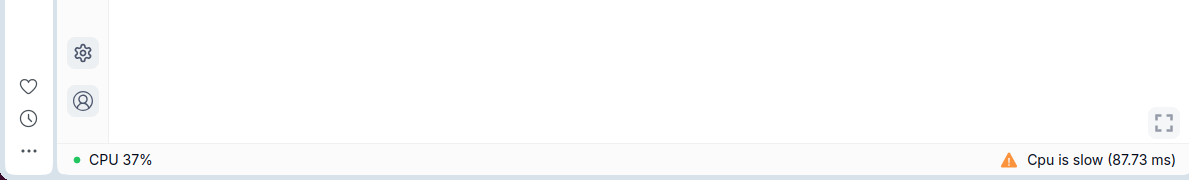

Frigate even warns us that CPU detection is slow:

So now let's try the OpenVINO detector:

detectors:
  ov:
    type: openvino
    device: GPU
model:
  width: 300
  height: 300
  input_tensor: nhwc
  input_pixel_format: bgr
  path: /openvino-model/ssdlite_mobilenet_v2.xml
  labelmap_path: /openvino-model/coco_91cl_bkgr.txt
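The model section describes the tensor that the detector receives: a 300x300 frame in NHWC layout (batch, height, width, channels) with three BGR channels. The sketch below only illustrates what that layout means in terms of shape and size; the function `input_shape` is hypothetical, and the one-byte-per-value figure assumes 8-bit input.

```python
def input_shape(width: int, height: int, layout: str, channels: int = 3) -> tuple[int, ...]:
    """Return the tensor shape for a single frame in the given layout."""
    layout = layout.lower()
    if layout == "nhwc":
        return (1, height, width, channels)
    if layout == "nchw":
        return (1, channels, height, width)
    raise ValueError(f"unsupported layout: {layout}")


shape = input_shape(300, 300, "nhwc")
print(shape)  # (1, 300, 300, 3)

# Assumption: one byte per value (8-bit pixels).
size_bytes = 1
for dim in shape:
    size_bytes *= dim
print(size_bytes)  # 270000
```

So each detection request moves roughly 270 KB of pixel data, regardless of the camera's native resolution.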

After restarting, you should see the following in the logs:

2025-03-09 09:41:01.085519814 [2025-03-09 09:41:01] detector.ov INFO : Starting detection process: 430
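If you want to check this automatically instead of reading the log by eye, the line can be matched with a regular expression. This is a sketch built only from the log line shown above; the pattern and the helper name `started_detectors` are assumptions, and other Frigate versions may format the message differently.

```python
import re

# Pattern derived from the single log line shown above.
START_RE = re.compile(
    r"detector\.(?P<name>\S+)\s+INFO\s*:\s*Starting detection process:\s*(?P<pid>\d+)"
)


def started_detectors(log_text: str) -> dict[str, int]:
    """Map detector names to the process ids reported at startup."""
    return {m["name"]: int(m["pid"]) for m in START_RE.finditer(log_text)}


line = (
    "2025-03-09 09:41:01.085519814 [2025-03-09 09:41:01] "
    "detector.ov INFO : Starting detection process: 430"
)
print(started_detectors(line))  # {'ov': 430}
```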

Intel GPU Tools

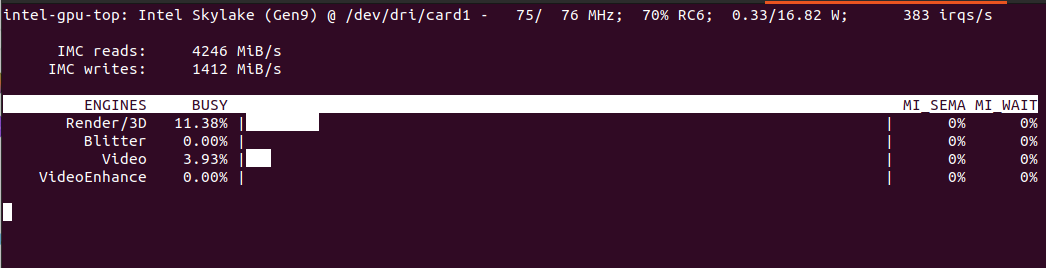

To verify CPU/GPU usage you can use intel-gpu-tools:

sudo apt install intel-gpu-tools

sudo intel_gpu_top

It will show something like this:

It should include both detection and video decoding, as we set hwaccel_args: preset-vaapi in the Frigate configuration. In theory, VAAPI should work with both Intel and AMD GPUs.

Automatically detected vaapi hwaccel for video decoding

Computer without discrete GPU

If the computer has an Intel CPU with an iGPU, you can specify the following configuration:

detectors:
  ov_0:
    type: openvino
    device: GPU
  ov_1:
    type: openvino
    device: CPU

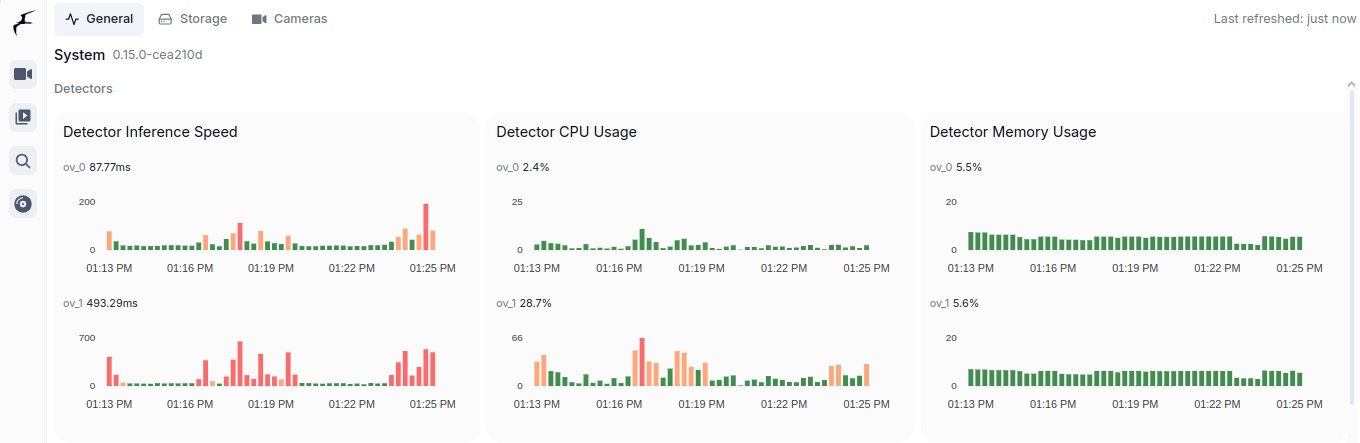

It will then use both the iGPU (ov_0, the fast one) and the CPU (ov_1, the slow one). As you can see, the difference in speed is significant (87 ms vs 493 ms). CPU utilization is of course higher for the CPU detector, as the iGPU detector uses the CPU only for coordination. Both components show similar memory usage, which is still system RAM, as the iGPU shares it with the CPU. Mine is an Intel Core m3-8100Y with 2 cores (4 threads) at 1.1 GHz. It has Intel UHD 615 graphics with 192 shader cores, which delivers 691 GFLOPS (less than 1 TFLOPS, to be clear) in FP16.
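To put these numbers in perspective, the inference times translate directly into an upper bound on detections per second, and the GFLOPS figure can be roughly reproduced from the shader count. The sketch below is back-of-the-envelope arithmetic only. The 0.9 GHz GPU clock, the two operations per cycle (fused multiply-add), and the doubling for FP16 are assumptions of mine, not values taken from the measurements above.

```python
def max_detections_per_second(inference_ms: float) -> float:
    """Upper bound on sequential inferences per second for one detector."""
    return 1000.0 / inference_ms


def peak_gflops(shader_cores: int, clock_ghz: float,
                ops_per_cycle: int = 2, fp16_factor: int = 2) -> float:
    """Theoretical peak: cores x ops per cycle x FP16 factor x clock."""
    return shader_cores * ops_per_cycle * fp16_factor * clock_ghz


igpu_ms, cpu_ms = 87, 493
print(f"speedup: {cpu_ms / igpu_ms:.1f}x")                         # speedup: 5.7x
print(f"iGPU: {max_detections_per_second(igpu_ms):.1f} per second")  # iGPU: 11.5 per second
print(f"CPU: {max_detections_per_second(cpu_ms):.1f} per second")    # CPU: 2.0 per second

# Assumption: 0.9 GHz maximum GPU clock for the UHD 615.
print(f"peak FP16: {peak_gflops(192, 0.9):.0f} GFLOPS")              # peak FP16: 691 GFLOPS
```

With the camera set to detect at 4 fps, the iGPU leaves comfortable headroom, while the CPU detector at about 2 inferences per second could fall behind during continuous motion.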

You can see utilization in Frigate:

Conclusion

It is very important to utilize hardware that is already available, and it is great that a framework like OpenVINO exists. There are tons of Intel Core processors with integrated Intel HD and UHD GPUs on the market. For a use case like Frigate object detection, offloading detection from the CPU to the CPU's integrated GPU is a perfect solution. It is much more convenient than a Coral TPU USB module, both in terms of installation and cost: the integrated GPU is already present in your computer.