I thought of adding another Proxmox node to the cluster. Instead of having PBS (Proxmox Backup Server) on a separate physical box, I wanted to have it virtualized, the same as in any other environment I set up. So I installed a fresh copy of the same Proxmox VE version and tried to join the cluster. And then this message came up:

```
Connection error 500: RPCEnvironment init request failed: Unable to load access control list: Connection refused
```

And plenty of other errors regarding hostname, SSH keys, quorum, etc. Eventually the Proxmox UI broke and I was unable to sign in. Restarting the cluster services ended with some meaningless output. So I was a little bit worried about the situation. Of course, I had backups of everything running on that box, but it would have been annoying to restore it all from backup.

The solution, or rather a monkey-patch, is to shut down the new node. Then, on any other working node (preferably the leader), execute:

```shell
umount -l /etc/pve
systemctl restart pve-cluster
```

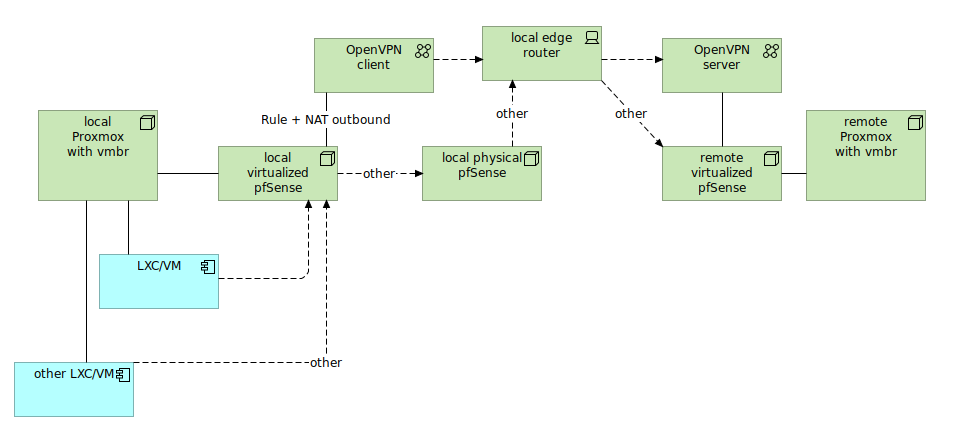
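For anyone who ends up repeating this, here is a minimal sketch that wraps the two commands in a POSIX shell function and adds a quick check afterwards (`systemctl is-active pve-cluster` and `pvecm status`). The function names `run` and `reset_pmxcfs_mount` and the `DRY_RUN` switch are hypothetical helpers of this sketch, not anything shipped with Proxmox. With `DRY_RUN=1` it only prints what it would run.

```shell
#!/bin/sh
# Sketch: the workaround above, wrapped with a dry-run switch and a basic
# check afterwards. Run as root on a healthy node, only after the new node
# has been shut down. DRY_RUN, run and reset_pmxcfs_mount are hypothetical
# names used for this sketch, not Proxmox settings or tools.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"          # only show what would be executed
    else
        "$@"
    fi
}

reset_pmxcfs_mount() {
    # Lazily detach the /etc/pve FUSE mount, even if it is still busy
    run umount -l /etc/pve
    # Restart pve-cluster (pmxcfs), which mounts /etc/pve again
    run systemctl restart pve-cluster
    # Report whether the service came back, then show cluster/quorum info
    run systemctl is-active pve-cluster
    run pvecm status
}
```

Running `DRY_RUN=1 reset_pmxcfs_mount` prints the four commands prefixed with `+`. Calling it without `DRY_RUN` executes them, so the same precaution applies: only on a working node, with the new node powered off.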

The `umount -l` command lazily unmounts the `/etc/pve` FUSE filesystem (pmxcfs), and restarting the `pve-cluster` service mounts it again.

What was the root cause of this? Actually, I do not know for sure, but I suspect either a networking or a filesystem issue causing discrepancies in the distributed configuration. I even added all node domains to the local pfSense resolver to make sure it is not a DNS issue. Another possibility is that 2 out of 4 nodes have an additional network interface with a bridge. Different filesystems (ZFS vs XFS) might be a problem, but I no longer think so, as 2 nodes already run ZFS and were added to the cluster without any problems in the past.
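To test the DNS theory more directly than adding resolver entries, a check like the one below could be run on every node and the outputs compared. It is a minimal sketch, assuming a POSIX shell with `getent` available. The function name `check_node_names` and the node names in the example call are hypothetical. Treating a loopback answer as a problem is an assumption of this sketch, based on the idea that other nodes cannot reach a node through 127.* or ::1.

```shell
#!/bin/sh
# Sketch: check that each given cluster node name resolves on this host to a
# non-loopback address. check_node_names is a hypothetical helper name.

# Prints "<name> <address>" for good names, "<name> UNRESOLVED" for names that
# do not resolve, and "<name> <address> LOOPBACK" for names resolving to 127.*
# or ::1. Returns the number of problem names (keep the list short, exit codes
# wrap at 256). Plain POSIX sh has no "local", so helper variables are global.
check_node_names() {
    failures=0
    for name in "$@"; do
        # getent follows the NSS order from /etc/nsswitch.conf (/etc/hosts,
        # DNS, ...); keep only the first address it returns
        addr=$(getent hosts "$name" | awk 'NR == 1 { print $1 }')
        if [ -z "$addr" ]; then
            echo "$name UNRESOLVED"
            failures=$((failures + 1))
            continue
        fi
        case "$addr" in
            127.*|::1)
                echo "$name $addr LOOPBACK"
                failures=$((failures + 1))
                ;;
            *)
                echo "$name $addr"
                ;;
        esac
    done
    return "$failures"
}

# Example call with hypothetical node names:
# check_node_names pve1 pve2 pve3 pve4
```

If every node prints the same address for every name and the return code is 0, name resolution is at least consistent across the cluster, which would make DNS a less likely suspect.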

I decided not to restart any nodes or separate them, as that might cause permanent breakage. So it remains a mystery, with a monkey-patch to get it running again.