Did you know that you can run Bielik, the Polish LLM from SpeakLeash, locally on your own computer? The easiest way to do this is with LM Studio (from lmstudio.ai).

- download LM Studio

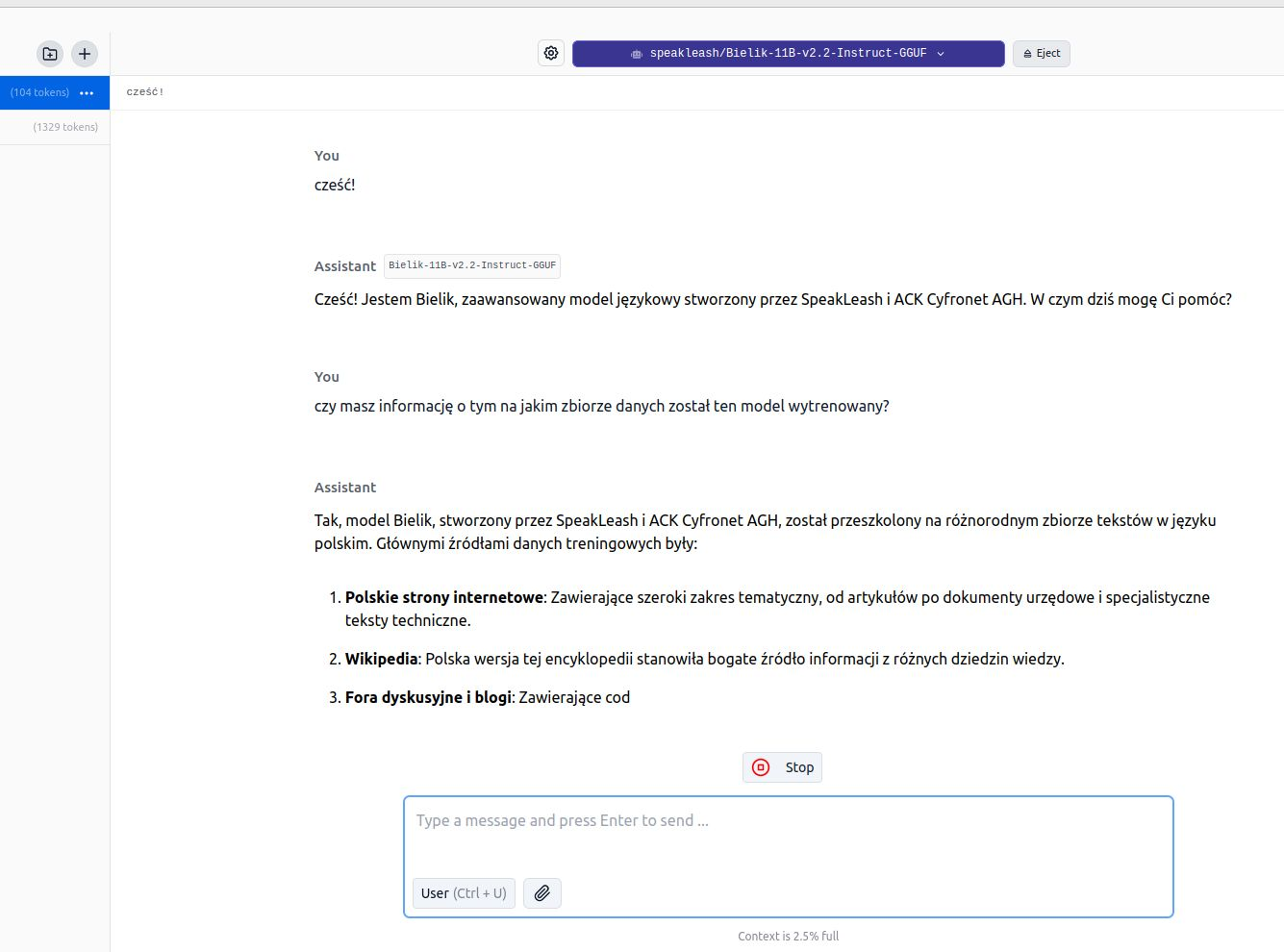

- download the model (e.g. Bielik-11B-v2.2-Instruct-GGUF)

- load the model

- open a new conversation

- converse…
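
Once the model is loaded, you can also talk to it from code instead of the chat window. Below is a minimal sketch, assuming you have started LM Studio's local server and it listens on its default address `http://localhost:1234` with an OpenAI-style chat completions endpoint. The model identifier is a placeholder (use whatever name LM Studio shows for the model you loaded), and `build_request` and `ask` are helper names made up for this example.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
BASE_URL = "http://localhost:1234/v1"
# Hypothetical identifier; replace it with the name LM Studio shows for your model.
MODEL_ID = "bielik-11b-v2.2-instruct"


def build_request(prompt: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the local server and return the first reply's text."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Cześć! Kim jesteś?"))` should print the model's answer. Nothing leaves your machine, because the request goes to `localhost`.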

Why run a model locally? Just for fun, for places where we don’t have internet access, or because we don’t want to share our data and conversations, and so on.

You can run it on macOS, Windows and Linux. It requires a CPU that supports AVX2 instructions, a large amount of RAM and, preferably, a modern dedicated graphics card.
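
If you are not sure whether your CPU supports AVX2, a quick check on Linux is to look at the `flags` line in `/proc/cpuinfo`. The sketch below does only that; it is Linux-specific (on macOS and Windows you would need a different method), and the function names are made up for this example.

```python
from pathlib import Path
from typing import Optional


def flags_include_avx2(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


def cpu_supports_avx2(path: str = "/proc/cpuinfo") -> Optional[bool]:
    """Check the local CPU on Linux; return None where the file does not exist."""
    cpuinfo = Path(path)
    if not cpuinfo.is_file():
        return None  # not Linux, or /proc is unavailable: cannot tell this way
    return flags_include_avx2(cpuinfo.read_text())
```

Calling `cpu_supports_avx2()` returns `True` or `False` on Linux, and `None` on systems without `/proc/cpuinfo`.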

Note: for example, on a ThinkPad T460p with an i5-6300HQ and a dedicated 940MX card with 2 GB of VRAM it basically refuses to work, but on a Dell G15 with an i5-10200H and an RTX 3050 Ti it works without any problems. I suspect the issue is Compute Capability rather than the amount of VRAM on the graphics card, because these models and libraries also do not work on my old datacenter cards (Tesla, Quadro).
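
To compare cards on both counts, you can ask the NVIDIA driver for each GPU's Compute Capability and VRAM size. This is a rough sketch, assuming `nvidia-smi` is on your `PATH` and your driver is recent enough to support the `compute_cap` query field (older drivers may reject it). The function names are made up for this example, and it does not tell you which minimum capability LM Studio actually needs.

```python
import subprocess
from typing import List, Tuple

GpuInfo = Tuple[str, Tuple[int, int], int]  # (name, (major, minor), VRAM in MiB)


def parse_gpu_rows(csv_text: str) -> List[GpuInfo]:
    """Parse 'name, compute_cap, memory.total' CSV rows (no header, no units)."""
    rows = []
    for line in csv_text.strip().splitlines():
        # Split from the right so a comma inside the GPU name cannot break parsing.
        name, capability, memory_mib = [f.strip() for f in line.rsplit(",", 2)]
        major, minor = capability.split(".")
        rows.append((name, (int(major), int(minor)), int(memory_mib)))
    return rows


def query_gpus() -> List[GpuInfo]:
    """Ask nvidia-smi for each GPU's name, compute capability and total VRAM."""
    output = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,compute_cap,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_rows(output)
```

Running `query_gpus()` on each machine gives you the numbers side by side, which makes it easier to see whether capability or memory is the likelier culprit.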